CLIP Paper Reading (Work in Progress)


Preface

The paper is titled ‘Learning Transferable Visual Models From Natural Language Supervision’ and comes from the team at OpenAI.

You can find more information about it on the OpenAI website:

In this post I’ll briefly describe how CLIP works.

1. Structure

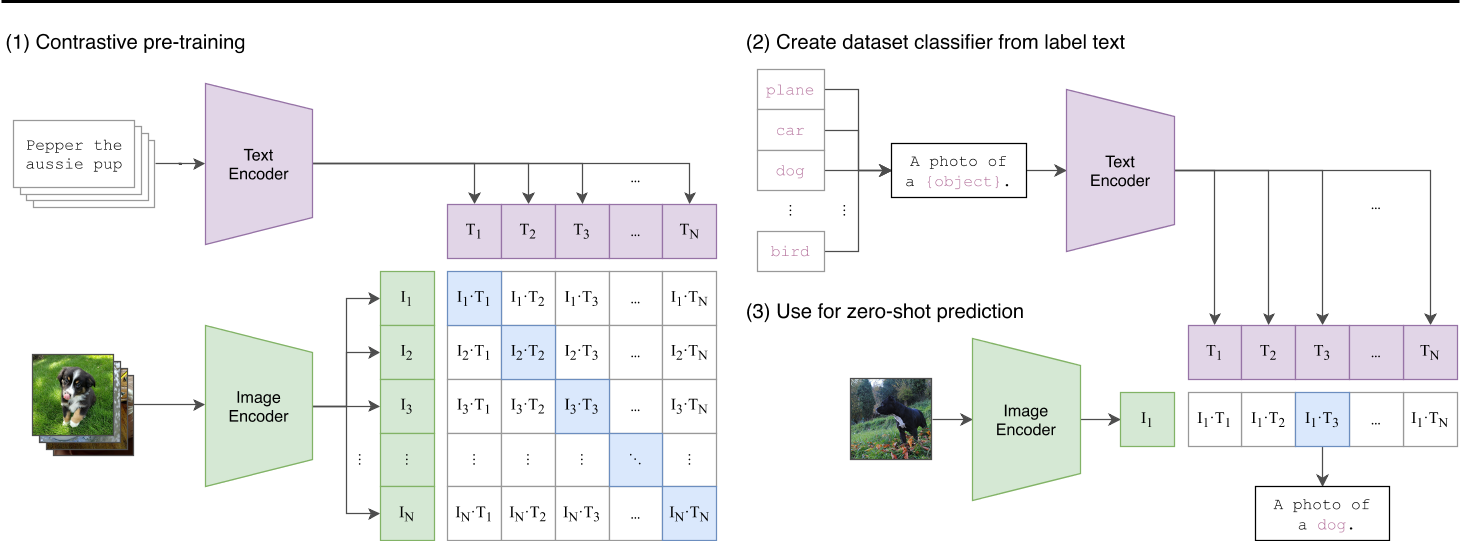

Figure 1. CLIP structure (source: the paper)
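Figure 1 in the paper has three parts: (1) contrastive pre-training, (2) creating a dataset classifier from label text, and (3) zero-shot prediction. The NumPy sketch below illustrates parts (1) and (3). It is loosely modeled on the paper's own NumPy-like pseudocode for the training objective and is not the official implementation. Several things here are assumptions: the image and text encoders are replaced by random feature arrays, the helper names `l2_normalize`, `clip_loss` and `zero_shot_predict` are hypothetical, and the temperature `t` (a learned log-scale parameter in the paper) is just a function argument. In a batch of n matched (image, text) pairs, the n diagonal entries of the n x n similarity matrix are the positives, and every other entry in the same row or column serves as a negative.

```python
import numpy as np


def l2_normalize(x, axis=-1):
    # Scale vectors to unit length so that dot products become cosine similarities.
    return x / np.linalg.norm(x, axis=axis, keepdims=True)


def log_softmax(logits, axis):
    # Numerically stable log-softmax along the given axis.
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def clip_loss(image_features, text_features, W_i, W_t, t):
    """Symmetric contrastive loss over a batch of n matched (image, text) pairs.

    image_features: [n, d_img] encoder outputs (random stand-ins here)
    text_features:  [n, d_txt] encoder outputs (random stand-ins here)
    W_i, W_t:       linear projections into a shared d_emb space
    t:              log of the logit scale (hypothetical plain argument)
    """
    I_e = l2_normalize(image_features @ W_i)   # [n, d_emb]
    T_e = l2_normalize(text_features @ W_t)    # [n, d_emb]
    logits = (I_e @ T_e.T) * np.exp(t)         # [n, n] scaled cosine similarities
    idx = np.arange(logits.shape[0])           # pair i is the correct match for row/column i
    # Image -> text: each row is a softmax over all texts in the batch.
    loss_img = -log_softmax(logits, axis=1)[idx, idx].mean()
    # Text -> image: each column is a softmax over all images in the batch.
    loss_txt = -log_softmax(logits, axis=0)[idx, idx].mean()
    return (loss_img + loss_txt) / 2


def zero_shot_predict(image_emb, class_text_embs):
    """Pick the class whose text embedding is most similar to the image embedding.

    image_emb:       [d_emb] image embedding in the shared space
    class_text_embs: [k, d_emb] embeddings of one text per class label
    """
    sims = l2_normalize(class_text_embs) @ l2_normalize(image_emb)  # [k]
    return int(np.argmax(sims))


# Usage with random stand-in data (no real encoders involved).
rng = np.random.default_rng(0)
n, d_img, d_txt, d_emb = 8, 32, 16, 12
loss = clip_loss(
    rng.normal(size=(n, d_img)),
    rng.normal(size=(n, d_txt)),
    rng.normal(size=(d_img, d_emb)),
    rng.normal(size=(d_txt, d_emb)),
    t=np.log(1 / 0.07),
)
print(f"contrastive loss on random data: {loss:.4f}")

class_texts = rng.normal(size=(3, d_emb))
image = class_texts[2] + 0.01 * rng.normal(size=d_emb)
print("predicted class:", zero_shot_predict(image, class_texts))
```

Averaging the two cross-entropy terms makes the objective symmetric: each image must pick out its own caption among the batch's texts, and each caption must pick out its own image among the batch's images. For zero-shot prediction (part 3 of the figure), `class_text_embs` would come from passing one text per label through the trained text encoder; random vectors stand in for that here.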

2.

3.

Unless otherwise stated, all posts on this blog are licensed under CC BY-NC-SA 4.0. When reposting, please credit Linermao's kiosk!